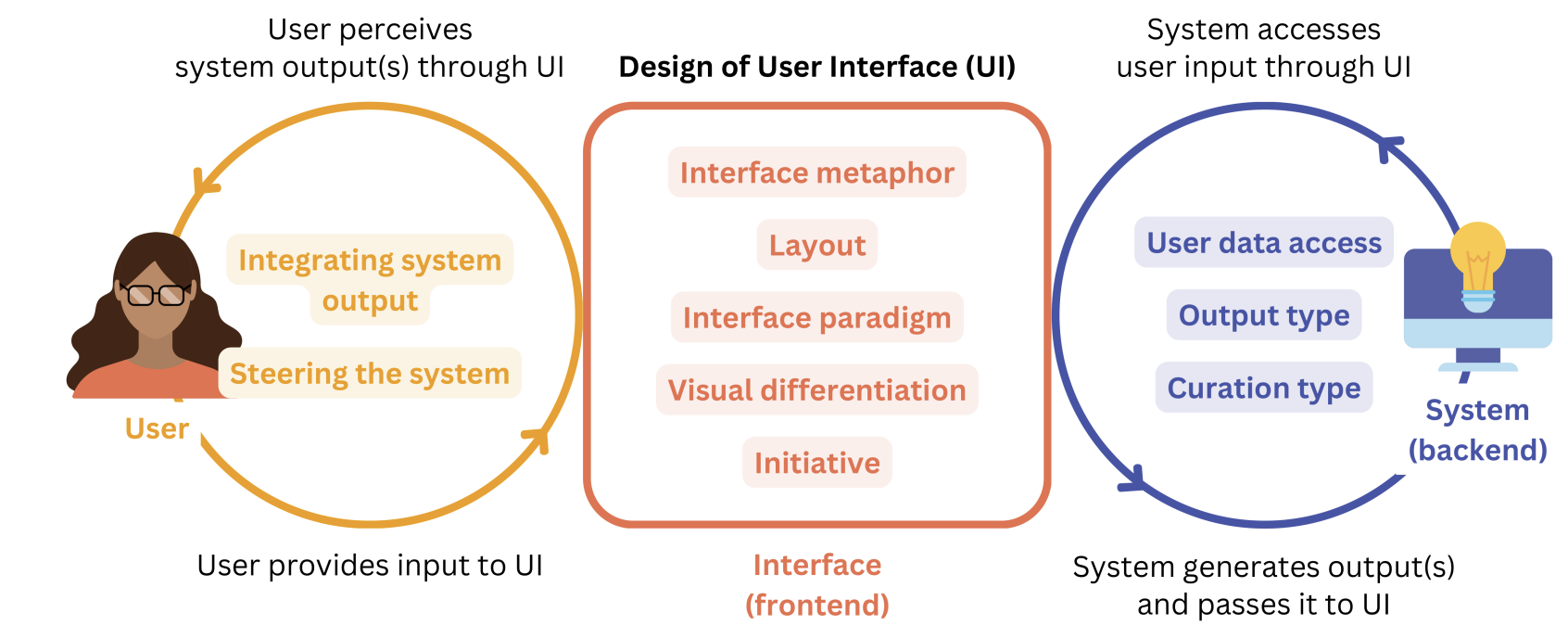

Hello everyone! I am Disha Shrivastava, a Senior Research Scientist at Google DeepMind, London. I am a core contributor to the Gemini project, focused on boosting its coding and reasoning abilities through post-training recipes, data, and evals. In addition, I am actively developing general-purpose agents designed to achieve superhuman performance in environments critical to humanity. Notably, I played a key role in developing Jules, an asynchronous coding agent. My interests also extend to designing better human-agent interfaces.

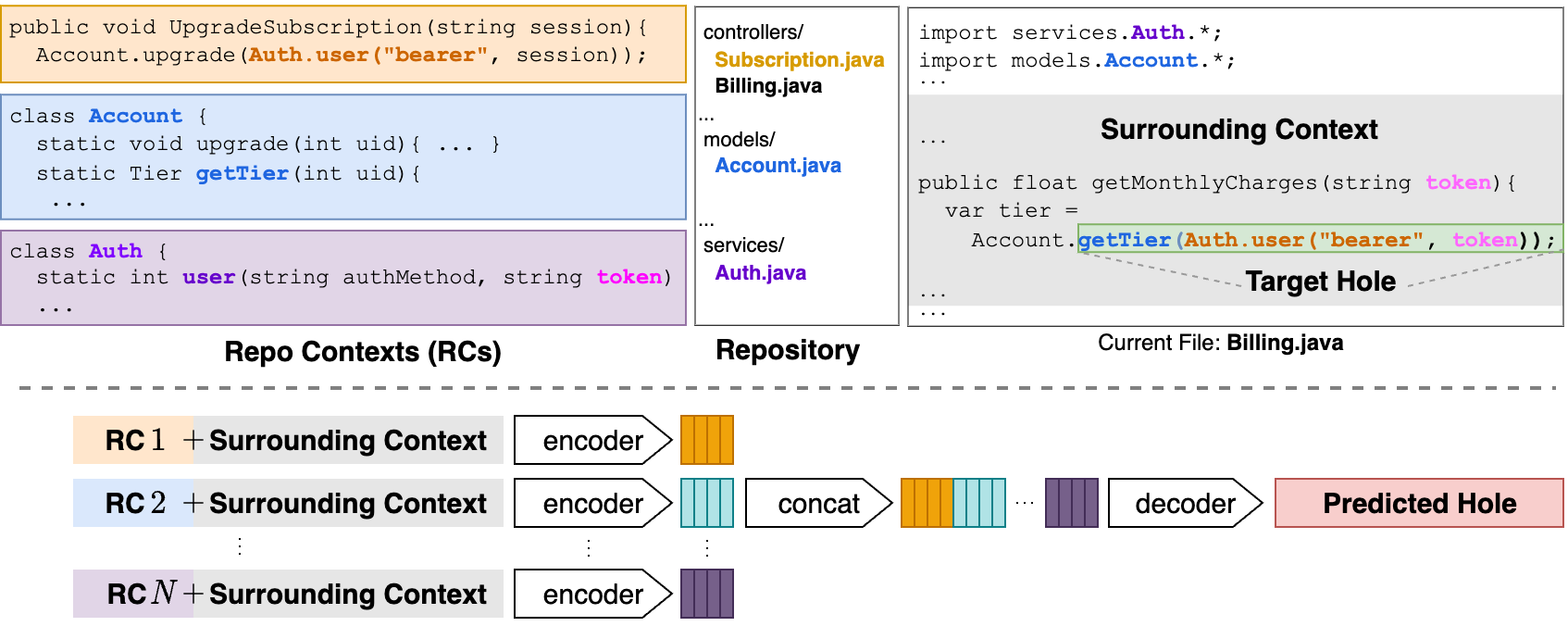

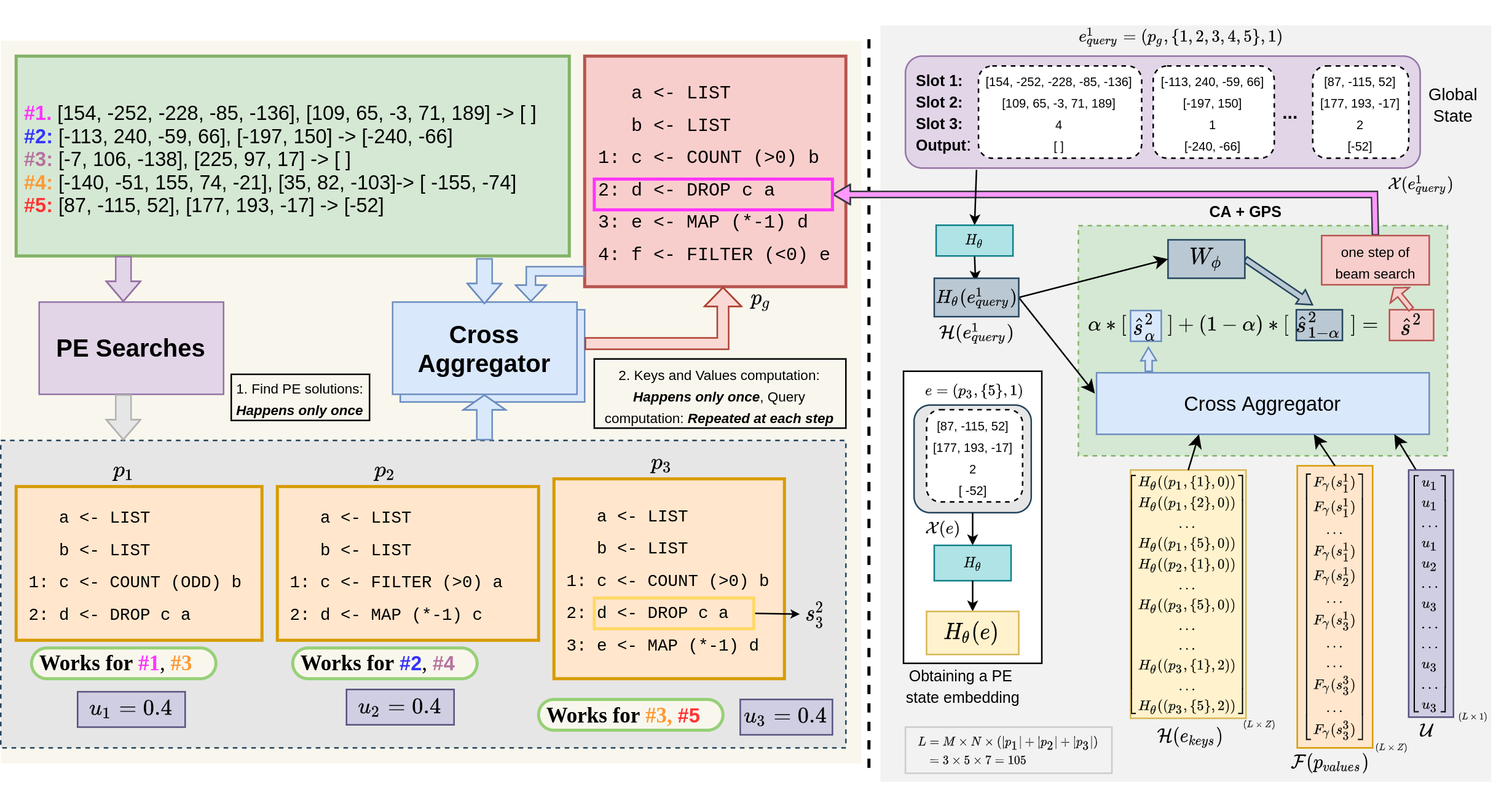

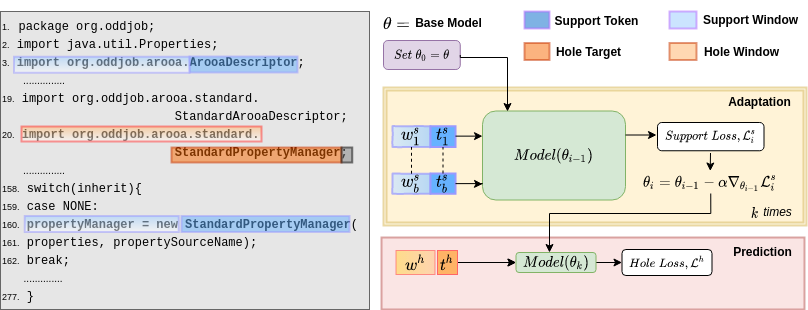

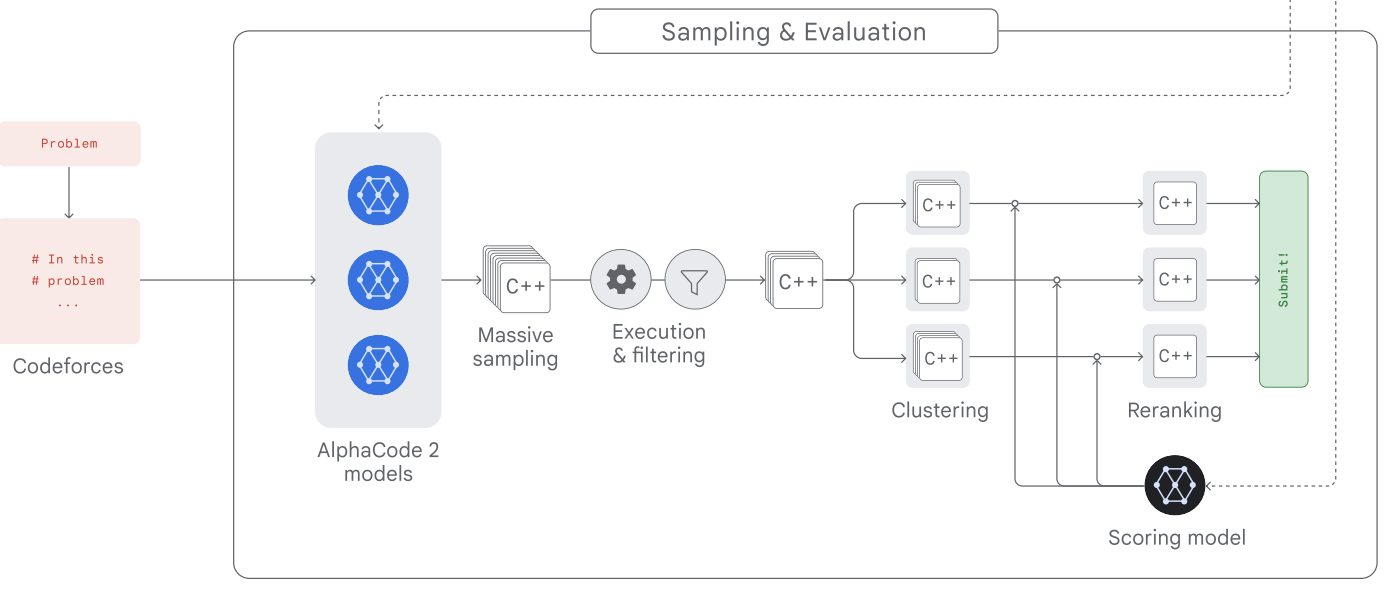

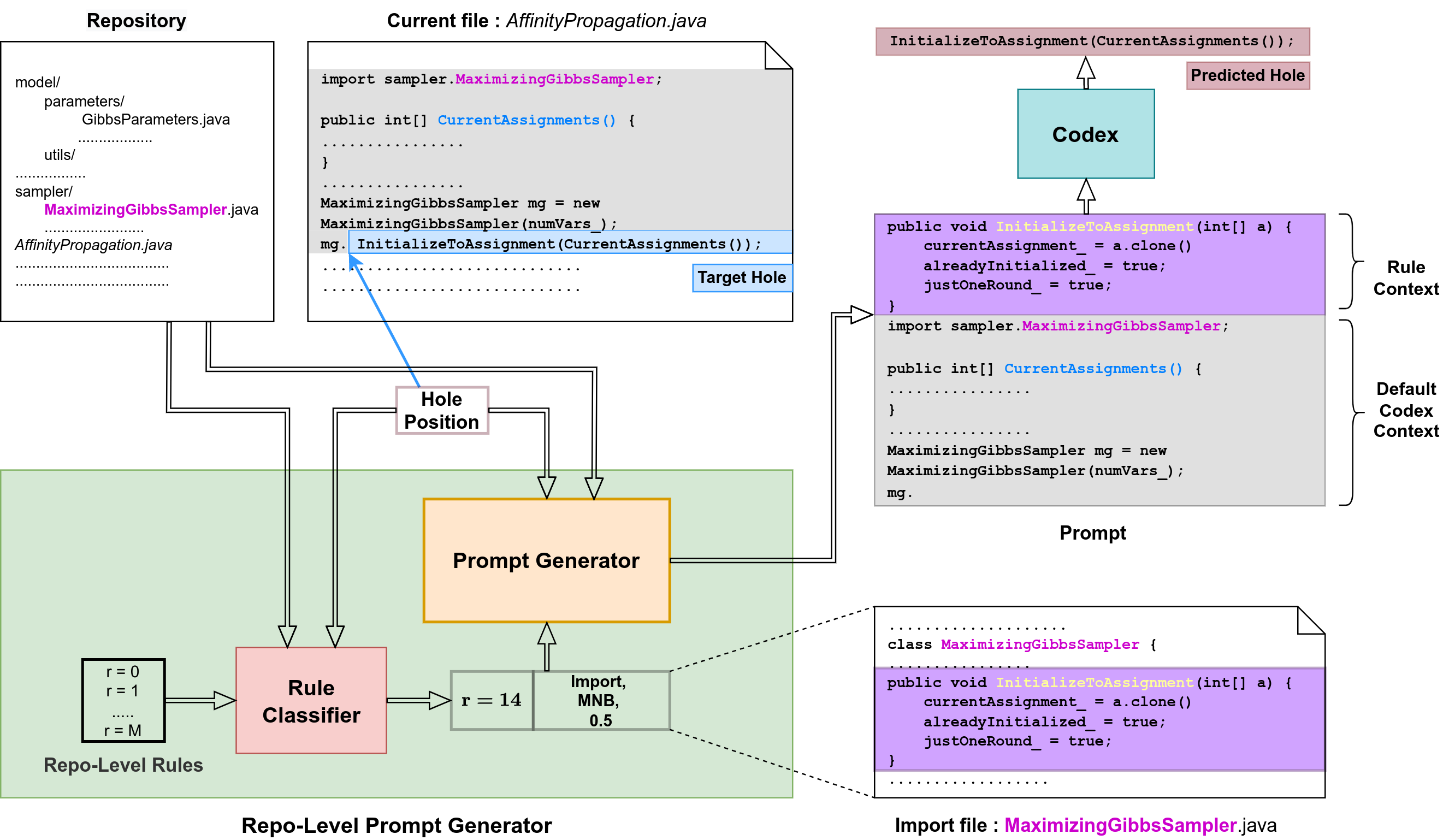

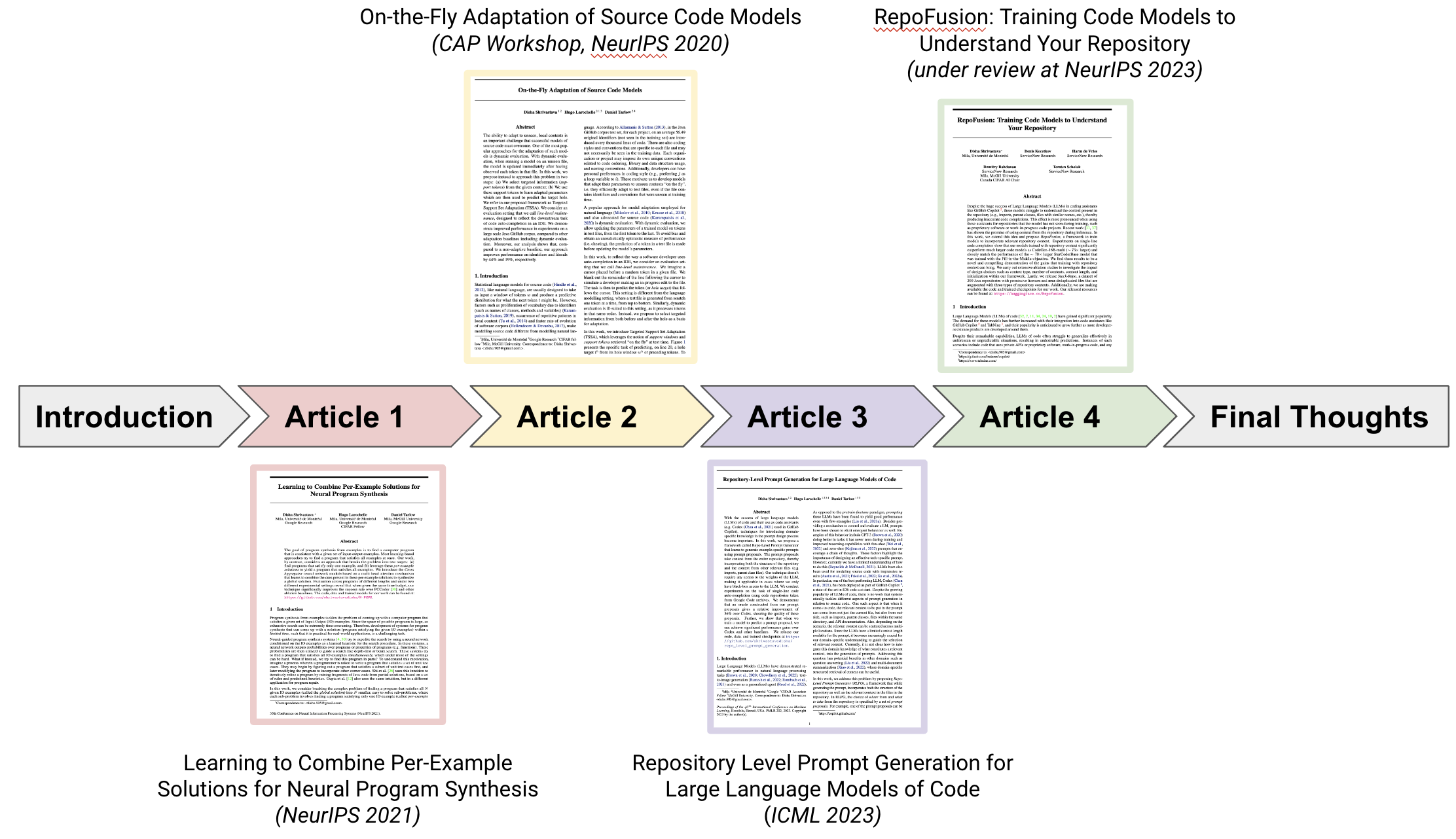

I did my PhD in AI at Mila, working with Hugo Larochelle and Danny Tarlow. My thesis focused on developing novel methods to identify and leverage contextual cues to improve deep learning models of code. During my PhD, I also worked as a Student Researcher at Google Brain, as a Research Scientist Intern at DeepMind working on AlphaCode, and as a Visiting Researcher at ServiceNow Research.

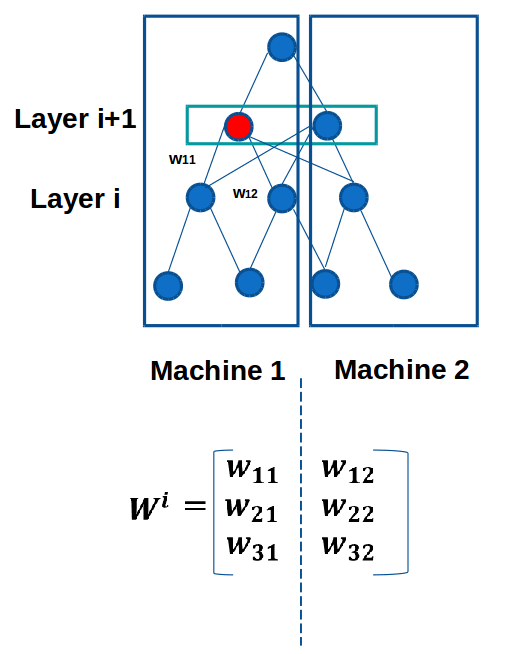

Before my PhD, I was a Research Software Engineer at IBM Research, India, where I worked on unsupervised knowledge graph construction, computational creativity metrics, and reasoning for maths question-answering. I hold a Master's in Computer Technology from IIT Delhi, where I developed a data- and model-parallel framework for training deep networks in Apache Spark. My Bachelor's degree is in Electronics and Communication Engineering from BIT Mesra.

I co-organized workshops on Deep Learning for Code (ICLR 2022-23), AIPLANS (NeurIPS 2021) and Neurosymbolic Generative Models (ICLR 2023). Outside of work, I like travelling, cooking, reading books, singing and blogging!

Selected Publications